👁️🗨️ Entrance observer

The main client service is `beehive-entrance-video-processor`. It runs on the edge device, where it captures video and sends data to the web-app. Our main priority is inference on the edge device, but we also want to support hybrid inference, with the cloud as a backup.
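To make the edge-first, cloud-assisted idea concrete, here is a minimal sketch of one possible routing policy. It is an illustration only, not the actual logic of `beehive-entrance-video-processor`: the names `Detection`, `hybrid_infer`, and `min_confidence`, as well as the convention that a model is a callable returning `(bee_count, confidence)`, are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional, Tuple

# Hypothetical model interface: takes a frame, returns (bee_count, confidence).
Model = Callable[[Any], Tuple[int, float]]


@dataclass
class Detection:
    bee_count: int
    confidence: float  # 0.0 .. 1.0
    source: str        # "edge" or "cloud"


def hybrid_infer(
    frame: Any,
    edge_model: Model,
    cloud_model: Optional[Model] = None,
    min_confidence: float = 0.6,  # assumed threshold, tune per model
) -> Detection:
    """Run inference on the edge first; ask the cloud only when the edge is unsure."""
    bee_count, confidence = edge_model(frame)
    edge_result = Detection(bee_count, confidence, "edge")

    # Edge result is good enough, or no cloud backend is configured.
    if confidence >= min_confidence or cloud_model is None:
        return edge_result

    try:
        bee_count, confidence = cloud_model(frame)
    except ConnectionError:
        # Network is down: keep working offline with the edge result.
        return edge_result
    return Detection(bee_count, confidence, "cloud")
```

With this policy the device keeps producing results without a network connection, and cloud cost is paid only for frames the edge model is unsure about.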

Video processing, playback and analytics

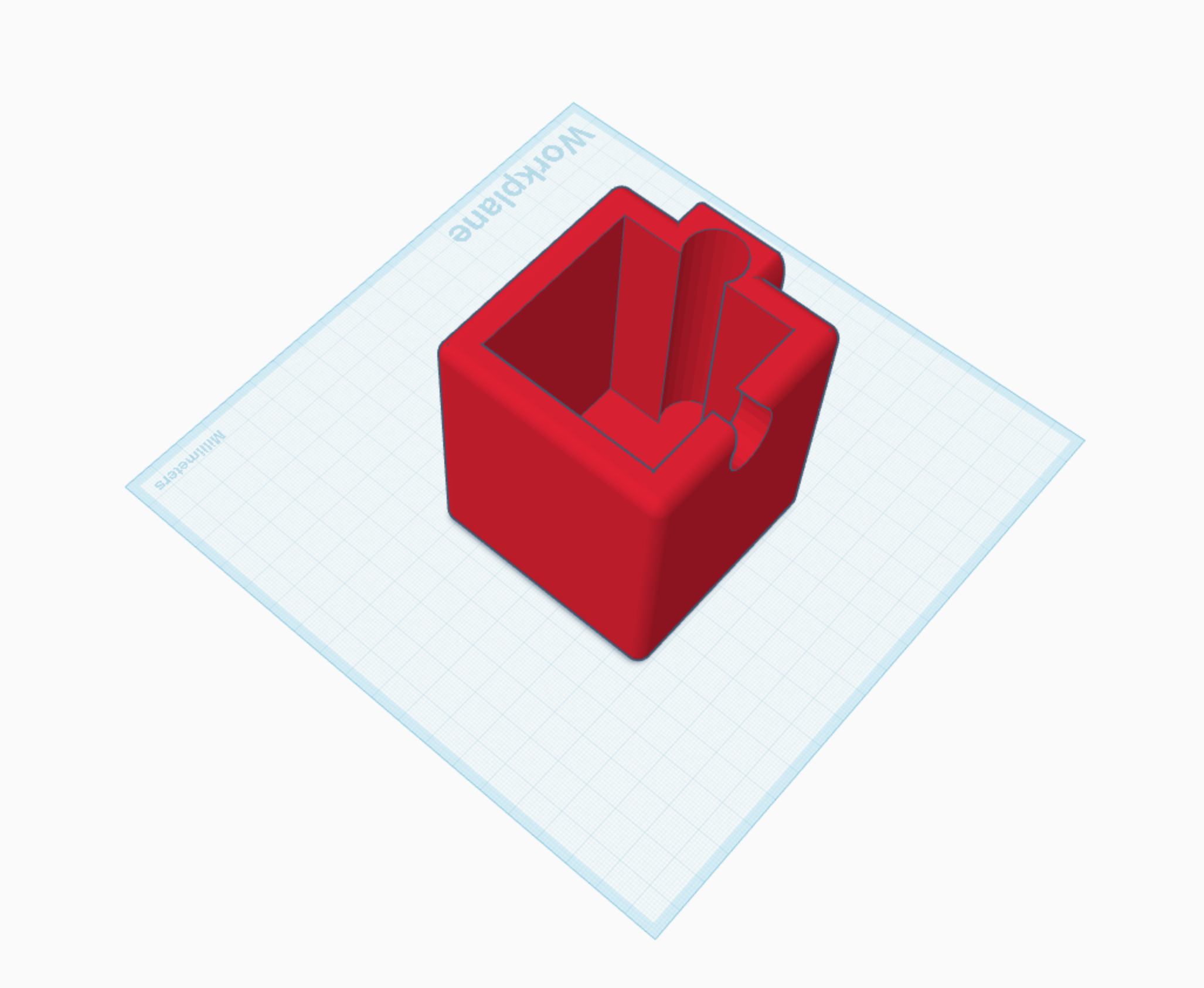

Camera protection cover

Choosing processing architecture

There are several options for where to process the video:

| Where | Pros | Cons |
|---|---|---|
| Edge device without GPU (Raspberry Pi), e.g. 🇨🇿 BeeLogger, BeePi | Cheap: ~95 EUR for the board | Limited to simple numerical models; may not be reliable |
| Edge device with GPU (Jetson Nano), e.g. 🇩🇪 Apic.ai, 🇦🇺 Beemate, 🔬 BeeAlarmed. Master's thesis | Efficient; low network dependency; can work offline with its own GPU | Cost: ~230 EUR for the board alone |
| Hybrid: on-premise (local) GPU workstation and video streaming devices | Lower total cost | Higher initial cost for the device; needs a dedicated workstation location |
| Cloud-only, e.g. LabelBee | | Needs high network bandwidth; needs optimization for variable network bandwidth; expensive (video streaming and processing cost, video storage cost) |
| Specialized PCB devices | Energy efficient; low production cost | Usually low on RAM and GPU; high development cost |
| On a mobile phone | Price controlled by the customer; has built-in networking; has a camera; has a screen; has battery and power management; no vendor lock-in; easiest to get started; easy for the beekeeper to set up; automatic app redeploys | High variety of phones, inconsistent experience; on-phone processing has GPU issues and needs a custom mobile TensorFlow; too high-level (in-browser), hard to handle exceptions and may need user intervention |
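The hybrid row claims a lower total cost despite a higher initial cost. The following rough sketch shows the arithmetic behind that trade-off. It uses the two board prices from the table (95 EUR and 230 EUR). It assumes each streaming device costs about as much as a Raspberry Pi board. The workstation price is a hypothetical input, and cameras, power, and networking are ignored.

```python
RPI_BOARD_EUR = 95      # from the table: edge device without GPU
JETSON_BOARD_EUR = 230  # from the table: edge device with GPU


def per_hive_gpu_cost(hives: int) -> int:
    """Total board cost when every hive gets its own GPU edge device."""
    return hives * JETSON_BOARD_EUR


def hybrid_cost(hives: int, workstation_eur: int) -> int:
    """Total cost of one shared GPU workstation plus one streaming device per hive.

    Assumption: a streaming device costs the same as a Raspberry Pi board.
    """
    return workstation_eur + hives * RPI_BOARD_EUR


def break_even_hives(workstation_eur: int) -> int:
    """Smallest number of hives at which the hybrid setup is strictly cheaper."""
    saving_per_hive = JETSON_BOARD_EUR - RPI_BOARD_EUR  # 135 EUR
    return workstation_eur // saving_per_hive + 1


# Hypothetical workstation price of 1500 EUR.
print(break_even_hives(1500))  # 12
```

Under these assumptions, a 1500 EUR workstation pays off from 12 hives onward. Below that, a GPU edge device per hive is cheaper.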